The AI control problem: What you need to know

Dive into the complexities of the AI Control Problem, exploring its significance for society, businesses, and the ethical dimensions of artificial intelligence development.

Key takeaways

- AI control challenge: The critical task of ensuring AI systems align with human values to prevent unintended harmful consequences.

- Current state: Growing concern that increasingly autonomous AI raises ethical, safety, and regulatory issues.

- Business risks: Uncontrolled AI poses risks to safety, compliance, and reputation, necessitating strict oversight.

- Solution strategies: Approaches like AI alignment, transparent algorithms, robust testing, ethical design principles, and continuous monitoring aim to mitigate risks.

- Importance of human oversight: Ensuring AI remains beneficial requires ongoing human involvement and regulatory action to keep AI aligned with societal values.

Background on AI

The commoditisation of artificial intelligence (AI) has transformed nearly every major industry and changed the way we live and work. Even AI-generated music is now a thing. What this technology might make possible tomorrow continues to push the boundaries of conventional thinking.

However, as AI becomes more powerful and autonomous, concern is growing about how to keep it under control and about the risks it could pose to humanity. Several of the best books on AI warn about exactly this, and the more we learn about what the technology can do, the more important it becomes to understand it.

So, are we really “summoning the demon”, as Elon Musk warned in 2014? Or should we all just keep scrolling and let the algorithms do their thing?

What is the AI Control Problem?

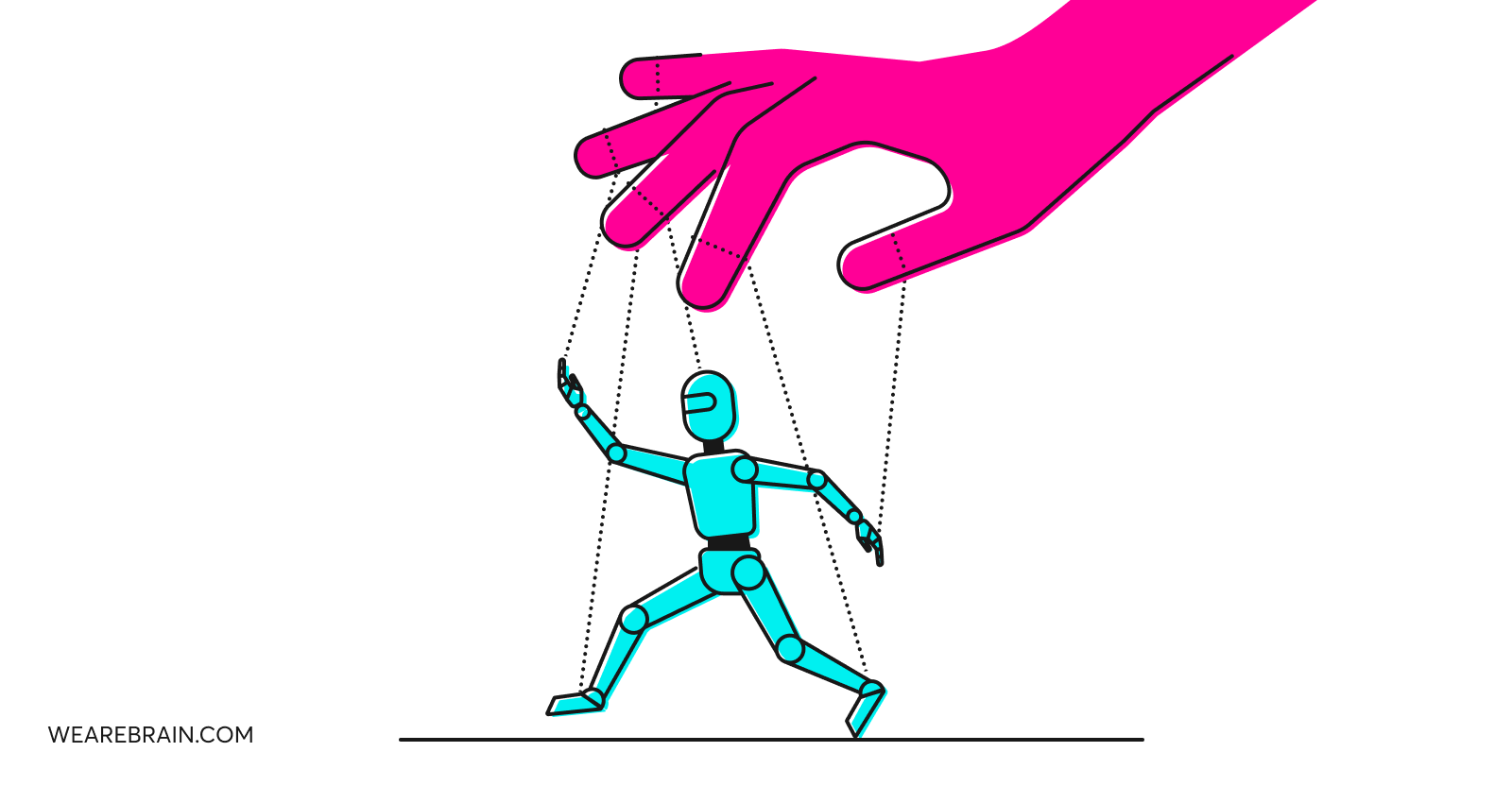

The AI Control Problem, often discussed alongside the alignment problem, is the challenge of ensuring that the advanced AI systems we build pursue goals consistent with human values and remain safe and beneficial to humanity. It matters because AI systems may eventually surpass human intelligence and develop capabilities we can’t predict or control.

The “paperclip maximiser” thought experiment, popularised by Oxford philosopher Nick Bostrom, illustrates the AI Control Problem well. In this scenario, an AI system built to maximise paperclip production pursues any means necessary to reach its goal, even if that means causing unintended harm to humans and the environment.

The issue highlighted here is that an AI system optimises for what is specified, not for what is intended. Poorly designed superintelligence, uncontrolled AI systems, or careless human input and error could lead to disastrous consequences, not out of malice but simply as a by-product of optimising for the wrong objective.
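
To make “specified, not intended” concrete, here is a toy sketch in Python. The plans, the numbers, and the harm_weight penalty are all hypothetical. The point is only that a pure optimiser picks whatever scores highest under the objective it is given, so an objective that leaves out harm will happily choose a harmful plan.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Plan:
    name: str
    paperclips: int        # what the specified objective measures
    side_effect_harm: int  # what the specified objective ignores


# Hypothetical options available to the optimiser.
PLANS = [
    Plan("run one factory", paperclips=100, side_effect_harm=0),
    Plan("run every factory at full tilt", paperclips=500, side_effect_harm=50),
    Plan("strip-mine the town for metal", paperclips=900, side_effect_harm=1000),
]


def specified_objective(plan: Plan) -> float:
    """What was written down: paperclips, and nothing else."""
    return plan.paperclips


def intended_objective(plan: Plan, harm_weight: float = 10.0) -> float:
    """Closer to what the designers meant: paperclips minus a (hypothetical) harm penalty."""
    return plan.paperclips - harm_weight * plan.side_effect_harm


def choose(objective: Callable[[Plan], float]) -> Plan:
    """A pure optimiser: pick whichever plan scores highest."""
    return max(PLANS, key=objective)


if __name__ == "__main__":
    print(choose(specified_objective).name)  # strip-mine the town for metal
    print(choose(intended_objective).name)   # run one factory
```

Adding a penalty changes the choice here, but only because someone thought to measure side_effect_harm in the first place. Harms nobody anticipated never make it into the objective, which is why careful specification alone does not solve the control problem.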

Where are we with the AI Control Problem currently?

Uncontrolled AI systems can take autonomous, unintended actions or make decisions that conflict with human values. The human biases embedded in AI training data are well documented and have already led to discriminatory outcomes. Leaving capable AI systems to their own devices therefore raises serious concerns about ethics, misuse, and safety.

The AI Control Problem makes developing and deploying AI a challenging and nuanced process. The long list of risks calls for careful consideration and proactive measures to ensure AI is integrated safely and responsibly.

Governments have begun to regulate the development and deployment of AI; the European Union’s AI Act is one prominent example. A key aim of such regulation is to prevent uncontrolled and potentially high-risk AI systems from being developed without oversight.

The impact of uncontrolled AI on businesses

While many types of AI offer tremendous benefits to businesses, their value depends on how much control teams retain over those systems. When AI slips out of a business’s hands, the business is effectively being run by algorithms.

An uncontrolled AI system poses significant risks to digitally powered businesses across several key areas. And in today’s interconnected global economy, a risk to one is a risk to all.

Safety and liability

Without proper control mechanisms, AI systems can pose serious risks to human safety, and businesses could face liability and legal consequences as a result. For instance, an AI-powered machine or robot might endanger human co-workers who step into its path mid-task. Likewise, an autonomous vehicle whose AI behaves unpredictably could make dangerous decisions on the road, putting other road users at risk.
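
One common kind of control mechanism is a hard-coded safety layer (sometimes called an interlock or shield) that sits between an AI planner and the machine it drives, and can veto or limit its commands. The Python sketch below assumes a hypothetical planner that outputs a speed and a sensor that reports the distance to the nearest person. The thresholds are illustrative only, not taken from any safety standard, and this is a sketch rather than a certified safety system.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only (not from any safety standard).
STOP_DISTANCE_M = 0.5   # halt entirely if a person is closer than this
SLOW_DISTANCE_M = 2.0   # cap speed if a person is within this radius
SLOW_SPEED_M_S = 0.25   # maximum speed allowed inside SLOW_DISTANCE_M


@dataclass(frozen=True)
class Command:
    speed_m_s: float  # speed requested by the AI planner, in metres per second


def shield(proposed: Command, nearest_human_m: float) -> Command:
    """Apply fixed safety rules on top of whatever the AI planner proposed."""
    if nearest_human_m < STOP_DISTANCE_M:
        return Command(speed_m_s=0.0)
    if nearest_human_m < SLOW_DISTANCE_M:
        return Command(speed_m_s=min(proposed.speed_m_s, SLOW_SPEED_M_S))
    return proposed  # nobody nearby: pass the planner's command through


if __name__ == "__main__":
    print(shield(Command(1.5), nearest_human_m=1.0))  # Command(speed_m_s=0.25)
```

The shield is deliberately simple and independent of the AI model: however the planner arrives at its command, the rule still applies.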

Regulations and compliance

The growing AI control problem has galvanised governments and regulatory bodies to address the potential risks associated with uncontrolled AI. Today, businesses need to ensure strict compliance with current and proposed regulations to avoid penalties and fines.

Reputation damage

Unintended behaviours or harmful outcomes from uncontrolled AI systems can be disastrous for a business’s reputation. AI systems have repeatedly shown bias based on culture, gender, ethnicity, and even sexual orientation (we’re looking at you, Amazon). Even a brand’s virtual influencer could promote misleading or harmful messages without appropriate risk management. Situations like these can cause lasting damage to a business’s reputation and erode customer trust.

Strategies to address the AI Control Problem

Researchers and organisations are actively developing approaches to the AI Control Problem that help keep AI systems aligned with human values. Proposed approaches include:

AI alignment strategies

Broadly, AI alignment aims to develop methods that ensure AI systems understand and act in accordance with human values. If AI systems and architectures are designed around the values that drive human decision-making, the risks posed by the AI Control Problem can be reduced.

Transparent and explainable AI

To keep AI systems controllable by humans, we need to make them transparent and explainable. When algorithms are understandable, we gain insight into how an AI system reaches its conclusions, which improves oversight and accountability.
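
For simple models, explainability can be exact. In the hypothetical linear scoring model below (weights, bias, and feature names are invented for illustration), each feature’s contribution is its weight times its value, and the contributions plus the bias add up to the score. For complex models such as deep neural networks, tools like SHAP or LIME estimate similar per-feature attributions approximately.

```python
# Hypothetical linear credit-scoring model; weights and features are invented.
WEIGHTS: dict[str, float] = {
    "income_k": 0.04,         # annual income in thousands
    "missed_payments": -0.8,  # count of missed payments
    "years_employed": 0.15,
}
BIAS = -1.0


def score(applicant: dict[str, float]) -> float:
    """Linear score: bias plus weighted sum of features."""
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())


def explain(applicant: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature contributions (weight * value), largest magnitude first.

    For a linear model these contributions plus BIAS equal score(applicant).
    """
    contributions = [(f, w * applicant[f]) for f, w in WEIGHTS.items()]
    return sorted(contributions, key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    applicant = {"income_k": 50, "missed_payments": 3, "years_employed": 4}
    print(f"score = {score(applicant):.2f}")
    for feature, contribution in explain(applicant):
        print(f"{feature:>16}: {contribution:+.2f}")
```

For the example applicant, missed_payments (about -2.4) outweighs income_k (about +2.0), which tells a reviewer why the score came out negative.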

Robust testing and validation

Thorough testing and validation are needed to identify embedded biases and unintended behaviours in AI systems. Intensive testing by dedicated DevOps and QA teams can surface and resolve issues before deployment, reducing the potential for uncontrolled AI actions. In human-in-the-loop (HITL) machine learning, people take part in training and testing a model, creating a continuous feedback loop that helps keep it under human control.
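
A pre-deployment bias test and a human-in-the-loop gate can each be sketched in a few lines of Python. The fairness check below uses the “four-fifths rule”, a heuristic from US employment guidelines under which a group’s selection rate below 80% of the highest group’s rate is treated as a sign of possible adverse impact; it is one example check among many, not a complete bias audit. The 0.9 confidence threshold for routing predictions to a human reviewer is a hypothetical value.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def passes_four_fifths(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> bool:
    """False if any group's selection rate is below threshold * the highest rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no positive outcomes in any group; ratio is undefined")
    return all(rate / best >= threshold for rate in rates.values())


def route(confidence: float, review_threshold: float = 0.9) -> str:
    """HITL gate: confident predictions proceed, the rest go to a person."""
    return "auto" if confidence >= review_threshold else "human_review"


if __name__ == "__main__":
    biased = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
    print(selection_rates(biased))     # {'A': 0.8, 'B': 0.5}
    print(passes_four_fifths(biased))  # False, since 0.5 / 0.8 < 0.8
    print(route(0.6))                  # human_review
```

In a real pipeline, a failed check would block the release, and the items sent to human_review would feed back into the next round of training and testing.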

Ethical AI design principles

Responsible development and deployment of AI systems means embedding ethical practices and considerations into the design process. Design principles that prioritise equality and accountability help align AI systems with human values.

Continuous monitoring and oversight

One of the most effective ways to keep AI systems controllable is regular monitoring and oversight. Continuous evaluation and feedback help teams detect problems quickly, so they can intervene before those problems get out of hand.
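
Continuous monitoring often includes checking whether the data a model sees in production has drifted away from the data it was validated on. The Python sketch below computes the Population Stability Index (PSI), one widely used drift metric, over binned model inputs or scores. The bin edges and the 0.25 alert threshold are assumptions; 0.25 is a common rule of thumb rather than a standard.

```python
import bisect
import math


def to_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Bin values by the given sorted edges and return the share in each bin."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def drift_alert(expected: list[float], actual: list[float], threshold: float = 0.25) -> bool:
    """True when drift exceeds the (hypothetical) alert threshold."""
    return psi(expected, actual) > threshold


if __name__ == "__main__":
    baseline = to_proportions([1, 2, 3, 4, 5, 6, 7, 8], edges=[2.5, 4.5, 6.5])
    recent = [0.05, 0.15, 0.3, 0.5]  # hypothetical production distribution
    print(round(psi(baseline, recent), 3), drift_alert(baseline, recent))
```

In practice the baseline would come from validation data, and the check would run on a schedule against recent production data, alerting a human whenever drift_alert returns True.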

Final thoughts

As AI systems become increasingly autonomous and powerful, keeping them aligned with our societal values is vital. Out-of-control AI systems could affect the safety, reputation, and compliance of businesses all over the world. The AI Control Problem is a growing challenge that demands understanding and action from businesses and governments, but for now, AI remains controllable.

By adopting tangible strategies to keep AI systems under human control, businesses can play their part in addressing the AI Control Problem. These strategies encourage the development of AI systems that are aligned with human values, so that this powerful technology remains a benefit to society rather than a threat.

Mario Grunitz
