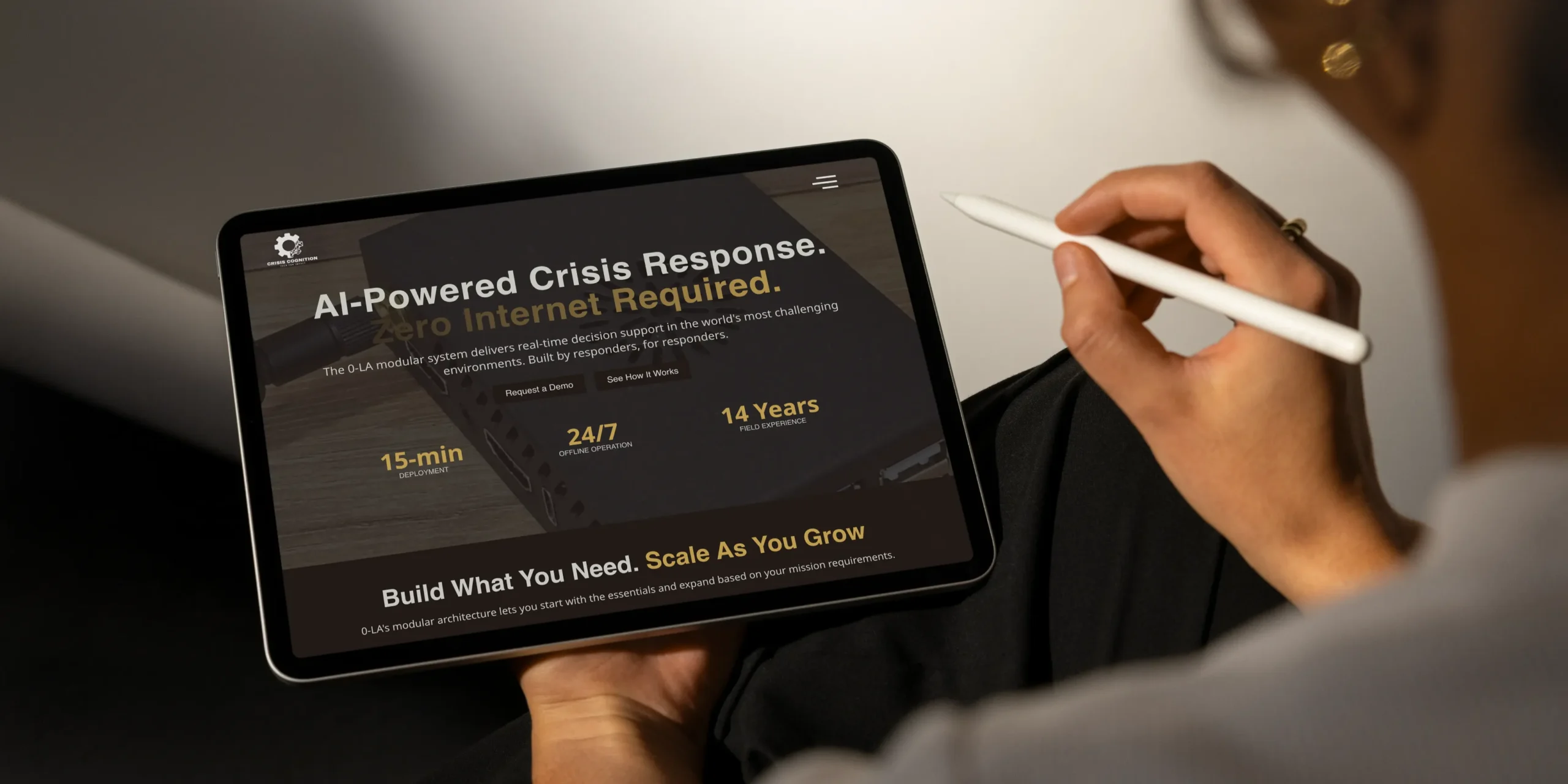

Offline AI prototype for crisis response, built for Crisis Cognition

TL;DR

WeAreBrain built the first offline AI prototype for Crisis Cognition. It runs locally on low-power hardware, supports field teams without internet access, and proves that advanced language models can operate in fully disconnected conditions.

The challenge

Crisis response teams often work in places where connectivity is unreliable or missing. When networks fail, cloud tools stop working and decision-making slows. Crisis Cognition aims to solve this by developing an AI assistant that continues to operate offline, giving responders fast and reliable support in difficult environments.

To turn this concept into a working system, Crisis Cognition partnered with WeAreBrain. Our team delivered the first operational prototype of their offline assistant. The prototype confirms that high-quality language models can run locally with low latency and without cloud dependence. It establishes a strong base for field pilots and future iterations.

Crisis Cognition contributes deep field insight and a mission focused on response effectiveness. We provided the engineering required to make the idea practical. Together we built a system designed to help teams act quickly and stay informed when communication lines fail.

Step-by-step process

1. Local network setup

We configured the Orange Pi 5 Max as a Wi-Fi Access Point. This allows phones, tablets, and laptops to connect directly without external infrastructure. It supports use in remote or disrupted areas.
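As an illustration of what an access point setup like this can involve, the sketch below renders a minimal WPA2 configuration file. It assumes the widely used `hostapd` daemon, which the prototype may or may not rely on. The interface name, SSID, passphrase, and channel are placeholder values, not the prototype's settings.

```python
from dataclasses import dataclass


@dataclass
class AccessPointConfig:
    # All defaults are illustrative placeholders (assumptions).
    interface: str = "wlan0"
    ssid: str = "crisis-assistant"
    passphrase: str = "change-me-please"
    channel: int = 6


def render_hostapd_conf(cfg: AccessPointConfig) -> str:
    """Render a minimal WPA2 hostapd.conf for a 2.4 GHz access point."""
    if not 8 <= len(cfg.passphrase) <= 63:
        raise ValueError("WPA2 passphrase must be 8 to 63 characters long")
    if not 1 <= len(cfg.ssid.encode("utf-8")) <= 32:
        raise ValueError("SSID must be 1 to 32 bytes long")
    lines = [
        f"interface={cfg.interface}",
        "driver=nl80211",            # standard Linux wireless driver interface
        f"ssid={cfg.ssid}",
        "hw_mode=g",                 # 2.4 GHz band
        f"channel={cfg.channel}",
        "wpa=2",                     # WPA2 only
        "wpa_key_mgmt=WPA-PSK",
        "rsn_pairwise=CCMP",
        f"wpa_passphrase={cfg.passphrase}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_hostapd_conf(AccessPointConfig()), end="")
```

A complete access point also needs a DHCP server so that connecting devices receive an address. That part is omitted here.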

2. Captive portal

A Captive Portal ensures that any connected device is automatically directed to the Open WebUI interface. This removes setup friction and lets responders begin using the assistant immediately.
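The redirect half of a captive portal can be sketched with Python's standard library alone. This is a minimal illustration, not the prototype's implementation. It assumes that DNS on the local network already resolves every hostname to the device, so that the connectivity checks sent by phones and laptops reach this server. `PORTAL_URL` is a hypothetical address for the Open WebUI instance.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical address of the Open WebUI instance on the device's own network.
PORTAL_URL = "http://192.168.4.1:8080/"


class RedirectHandler(BaseHTTPRequestHandler):
    """Answer every request with a redirect to the assistant's UI."""

    def _redirect(self) -> None:
        self.send_response(302)
        self.send_header("Location", PORTAL_URL)
        self.send_header("Content-Length", "0")
        self.end_headers()

    # Treat the common methods identically: always send the client to the portal.
    do_GET = _redirect
    do_HEAD = _redirect
    do_POST = _redirect

    def log_message(self, format, *args):
        pass  # keep the console quiet


def make_server(host: str = "0.0.0.0", port: int = 80) -> ThreadingHTTPServer:
    return ThreadingHTTPServer((host, port), RedirectHandler)


if __name__ == "__main__":
    # Binding to port 80 normally requires elevated privileges.
    make_server().serve_forever()
```

Because the operating system's connectivity probe receives a redirect instead of the response it expects, the device typically opens its captive portal window on the redirect target.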

3. End-to-end integration

The final system operates as a self-contained unit. The model runs locally, the UI loads over the device’s Wi-Fi network, and the user can issue queries without internet access or cloud services.
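To make the self-contained flow concrete, the sketch below sends a question to a model served on the device itself. It assumes the local runtime exposes an OpenAI-compatible chat completions endpoint, which is one of the backend types Open WebUI can connect to. The prototype's actual API is not described here, so the base URL, port, and model identifier are hypothetical.

```python
import json
import urllib.request

# Hypothetical local endpoint and model identifier (assumptions, not the prototype's values).
BASE_URL = "http://192.168.4.1:8000/v1"
MODEL_NAME = "Qwen2.5-3B-Instruct"


def build_chat_request(question: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a chat completion request aimed at the device, not at a cloud service."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, timeout: float = 60.0) -> str:
    """Send the question over the local network and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(question), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Every address in the request is on the device's own Wi-Fi network, so no traffic leaves the unit.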

This prototype demonstrates that offline AI is feasible on compact, low-power hardware. It supports Crisis Cognition’s aim to equip responders with decision support tools they can rely on when infrastructure breaks down.

The result

The prototype confirms the following:

- LLMs can run reliably on the RK3588 NPU.

- Offline inference is viable for crisis environments.

- A fully self-contained AI assistant can operate without cloud services.

- Low-latency responses are achievable within hardware limits.

- Non-technical users can access the system through a simple UI.

For a detailed architecture overview or to explore potential collaboration, contact WeAreBrain.

If you represent an NGO or a humanitarian support organisation, reach out to the team at Crisis Cognition.

Technology and hardware used

The prototype needed to run AI models without internet access, handle local inference, and remain reliable under limited power or unstable conditions. We selected hardware and software that support these requirements.

1. Hardware

We used the Orange Pi 5 Max single-board computer with the RK3588 processor and integrated NPU. This hardware delivers efficient on-device inference for language models. A microSD card was added for local storage of model files and operational data.

2. Operating system

The device runs Armbian OS, a lightweight Linux distribution optimised for ARM-based boards. It provides stability, predictable performance, and a good base for further customisation.

3. LLM runtime and model

To execute the model locally, we used rkllm, a runtime designed for the RK3588 NPU. We extended this runtime in our own repository.

The selected model is Qwen2.5-3B-Instruct, optimised for this chip. It offers a good balance between accuracy, speed, and memory use. The model responds quickly even when hardware resources are limited.
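The memory side of that balance can be estimated roughly. The sketch below computes the space taken by the weights alone at different precisions. It assumes about 3 billion parameters, taken from the "3B" in the model name, and ignores activations, the KV cache, and runtime overhead. The precision used in the prototype is not stated, so the bit widths are illustrative.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


# Assumption: roughly 3 billion parameters, as suggested by the "3B" in the name.
APPROX_PARAMS = 3e9

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gib(APPROX_PARAMS, bits):.1f} GiB")
# 16-bit weights: ~5.6 GiB
# 8-bit weights: ~2.8 GiB
# 4-bit weights: ~1.4 GiB
```

Estimates like these help explain why a model of this size can fit on a single-board computer, particularly at lower precision.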

4. User interface

To give responders a simple way to interact with the system, we deployed Open WebUI. We adapted it in our GitHub repository. The interface runs in a browser, which makes the system easy to use in the field.